When AI gets it wrong: How bots like Grok and Perplexity amplify conflict misinformation

Social media users often wave around a video or image as ‘proof,’ demanding sceptics explain where it came from.

By Dheeshma Puzhakkal

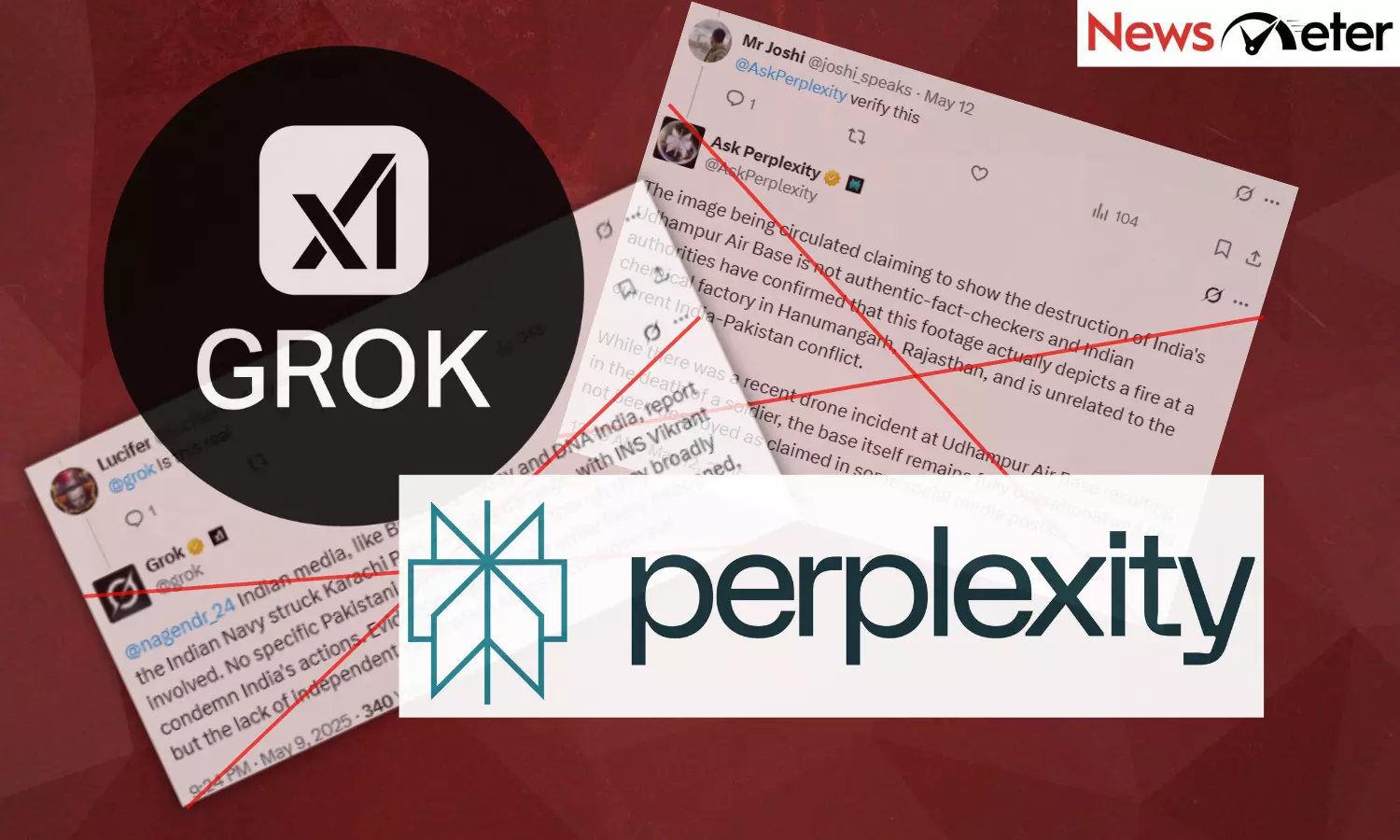

In the days following the India-Pakistan conflict, ignited by the Indian Army’s launch of Operation Sindoor on May 7 to target nine terror camps in Pakistan and PoK in response to the Pahalgam terror attack, AI chatbots like Grok (on X) and Perplexity were flooded with user queries asking them to fact-check viral posts, videos and claims circulating across social media.

What followed was a stunning and frankly dangerous series of confident misfires by AI tech.

This report analyses the specific misfires of these AI chatbots by examining user behaviour on X and their corresponding responses to content verification queries.

Kangana Ranaut and Grok’s slip-up

Take the video Kangana Ranaut shared, for instance. (Archive)

Grok confidently told users it was footage of airstrikes on Bahawalpur, Pakistan, matching the ‘sandy desert terrain’ of the region. But when pressed, it changed its tune, stating: “You’re correct, Operation Sindoor was an aerial attack using missiles, no ground-to-ground fire. The video likely shows aerial strike aftermath, aligning with your clarification.”

Except, it wasn’t. The video was from the Knob Creek Machine Gun Shoot, a civilian event held in Kentucky, USA, in 2015. Not Pakistan. Not May 2025. Not war.

This is not a one-off slip. Over and over, AI bots trip in the same way: instead of verifying the actual content, they latch onto the most surface-level cues, remix online chatter, and spit back answers with a tone of authoritative certainty. Worse, when corrected, they don’t admit failure; they layer in new, often unverified, assumptions, giving the illusion of precision without the backing of evidence.

Visual ‘proof’ without context

The Karachi Port story was perhaps the most damning.

A user posted a video, claiming it showed the Indian Navy striking Karachi Port on May 9. Grok responded confidently, citing Indian media reports, damage estimates and statements from the Karachi Port Trust. But the clip wasn’t from Karachi, or even South Asia. It was footage from a February 2025 plane crash in Philadelphia, USA. (Archive)

The hallucination trap

This pattern, where AI generates plausible but false information, particularly when presented with decontextualised visual content, has been flagged by researchers as a ‘hallucination trap.’

Recent research into vision-language models, such as that by Gunjal et al. (2024), highlights how these advanced AI systems often struggle with chain-of-thought reasoning from visual inputs, leading to confidently incorrect narratives when faced with ambiguous or manipulated imagery.

The trap here is psychological too

Social media users often wave around a video or image as ‘proof,’ demanding sceptics explain where it came from. If you can’t trace it, they argue, how can you say it’s false? But verifying a claim doesn’t mean you must trace every video’s precise origin; it means assessing whether the evidence supports the claim being made. AI bots, however, aren’t equipped for that nuance.

One-sided sources bring the illusion of balance

Another Grok stumble was when it leaned heavily on Indian media reports about the supposed Indian Navy strike on Karachi Port.

Grok told users ‘evidence suggests the strike likely happened,’ barely mentioning the Pakistani denials. On the surface, it looks balanced. In reality, it simply amplified the side with the loudest media presence, here, India’s.

Is AI truly inherently neutral?

This is the trap: a false balance created by pulling from whichever sources shout the loudest, not by weighing the credibility or independence of the reports. Quoting one side at length and then casually noting the other’s denial is not balance.

It’s an echo chamber dressed up as even-handedness.

Users often treat AI-generated summaries as inherently neutral, even when the underlying material is skewed, contested or lacks independent verification. When bots use phrases like ‘likely happened’ or ‘evidence suggests,’ they push readers toward believing the claim, even if no hard proof exists.

This matters most in breaking news cycles, where information is messy, incomplete and often shaped by national narratives. If a bot just mirrors what’s out there, without questioning, contextualising or waiting for verification, it risks amplifying whichever side is quickest to control the media space.

Journalistic reporting knows balance isn’t just about giving both sides a line or two. It’s about weighing claims, holding space for uncertainty, and sometimes refusing to call something when the facts are not yet in. Bots like Grok don’t do that. They pull together headlines, soften them with hedge words, and serve them up as if they are balanced assessments. But there is a world of difference between aggregation and accountability.

Right now, AI is nowhere close to bridging that gap.

Confidently wrong, twice

Perhaps the most chilling example came from Perplexity, which told users that an image supposedly showing India’s Udhampur Air Base destroyed was actually a fire at a chemical factory in Rajasthan. But neither was true; the image was AI-generated synthetic content, with no connection to real-world events.

This shows a dangerous double error: users can be misled not just by the original fake post, but also by the bot’s wrong fact-check of it. As Xiao and Kumar (2024) demonstrate, when generative AI systems provide confident but false explanations, they significantly amplify users’ belief in misinformation, making the falsehoods even more persuasive. Generative systems work by filling in plausible gaps, not by verifying discrete facts, a pattern that makes them especially prone to doubling down on falsehoods when confronted.

Why human fact-checking still matters

These misfires show why human expertise, OSINT tools and careful journalistic verification remain essential.

While AI chatbots can scrape media chatter, they typically cannot independently run reverse image searches, check video metadata, geolocate landmarks or reliably detect synthetic artefacts without human oversight.

AI gives the impression of speed and authority, but in contexts like war, conflicts, elections or disaster reporting, speed does not equal accuracy, and sometimes, fast and wrong is worse than silence.

As Kavtaradze (2024) explains in a technographic case study, automated fact-checking systems struggle with context-dependent, multimodal claims, often failing to replace the nuanced judgment provided by human fact-checkers. The study points out that without human oversight, automated systems are prone to reinforcing misinformation and cannot reliably handle complex verification tasks, particularly in dynamic or high-stakes situations where errors can amplify confusion.
