The rise of AI-generated content: How Indian personalities were targeted in 2024

The aim of deepfakes and other manipulated content is often to make it look convincingly as though a trusted public figure has endorsed a dubious venture or product.

By Md Mahfooz Alam

Hyderabad: Indian public personalities increasingly find themselves the subjects of AI-generated or AI-manipulated content designed to deceive, misinform and exploit the credibility that comes with their popularity.

The rise of deepfake videos, voice cloning and manipulated visuals has sparked widespread concern. In 2024, these malicious campaigns targeted several prominent personalities, including politicians, business leaders, journalists and actors. Often, the aim of deepfakes and other manipulated content is to make it look convincingly as though a trusted public figure has endorsed a dubious venture or product, and so to lure investments from the general public.

This article examines the most viral instances of public figures being targeted by AI-generated content in 2024. The information is sourced from fact-checks conducted by NewsMeter and other IFCN-affiliated organisations in India. Our analysis covers 46 documented instances of AI-generated misinformation involving 39 prominent public figures. There may be other cases, but this article focuses exclusively on these 46.

Who are the targets?

The following chart shows how AI-generated content impersonating public figures was spread across professions (politics, media, sports, etc.).

Prominent public figures who featured in these deepfakes include Finance Minister Nirmala Sitharaman, actor Shah Rukh Khan, cricketer Virat Kohli and business tycoon Mukesh Ambani, among 35 others.

Types of attacks using artificial intelligence

Malicious AI-generated content was primarily used to promote fraudulent financial schemes (FFS), health misinformation (HM), political misinformation and disinformation (PM/D) and entertainment gaming apps (GA). Deepfakes and voice clones falsely linked public figures to fraudulent schemes and other dubious financial projects in 20 instances, to health misinformation in 10, to political content in 8 and to unauthorised gaming platforms in 8.

Fraudulent Financial Schemes (FFS)

These schemes featured AI-generated content that falsely associated public figures with fraudulent financial ventures, including investment scams, crypto schemes and gaming apps.

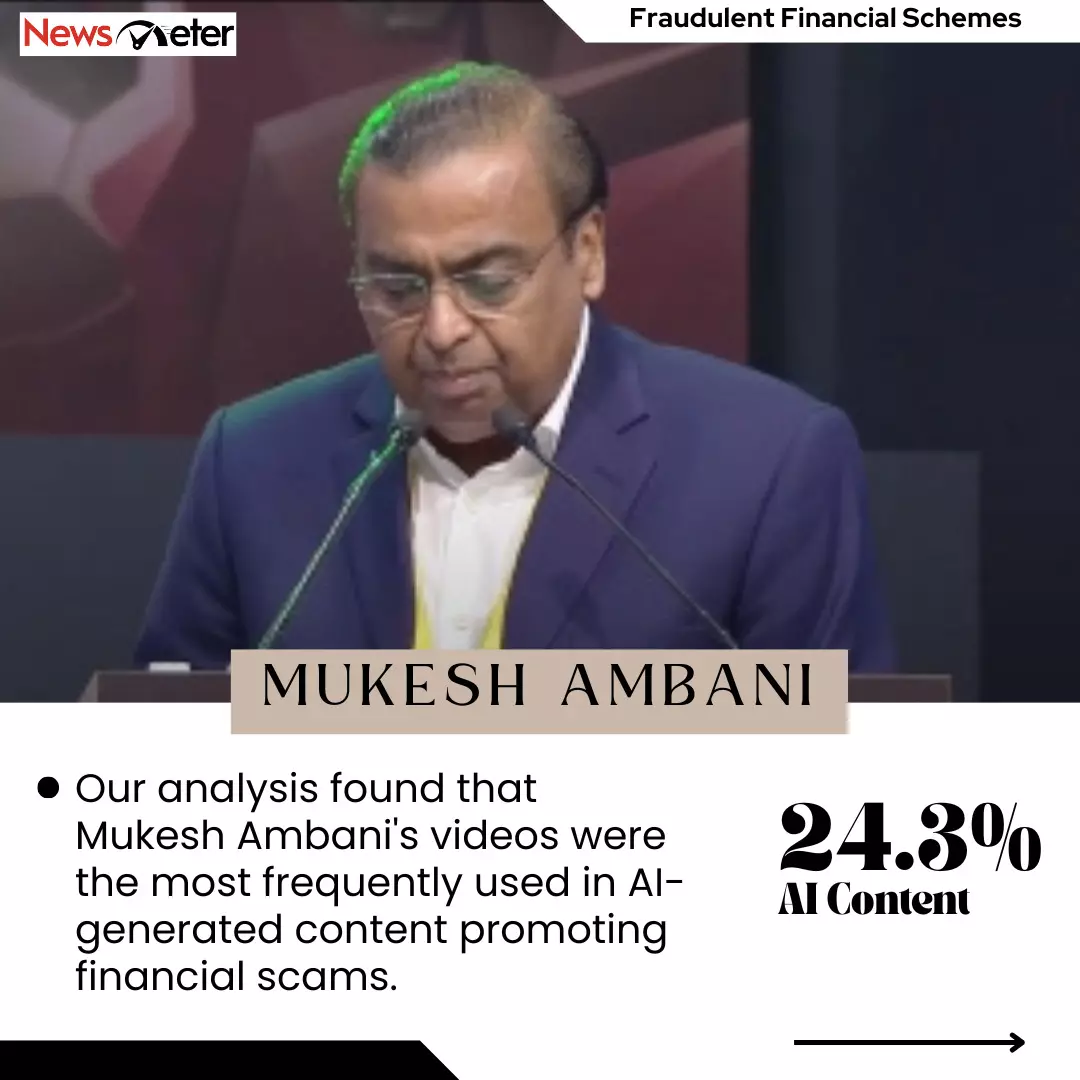

Our analysis found that Mukesh Ambani's likeness was the most frequently used in AI-generated content promoting financial scams, appearing in 24.3 per cent of the cases in this category. For instance, in March, a deepfake showed Ambani promoting a get-rich-quick scheme. In the video, an AI-manipulated Ambani urged viewers to follow his ‘student’ Veenit on social media for free advice on multiplying their wealth.

In another incident in November, Mukesh Ambani was paired with Bharti Enterprises founder Sunil Mittal in a digitally altered video, in which AI-generated audio made it sound as though they were promoting an automated investment platform they had supposedly launched.

Finance Minister Nirmala Sitharaman has also been a victim of this trend, featuring in at least 8.1 per cent of the instances we analysed. One of her deepfakes falsely showed her promoting crypto trading and offering dubious financial advice.

A particularly notable case in November involved a viral video in which Sitharaman was seen ‘endorsing’ an investment app that supposedly quadruples investments. The video also featured Reserve Bank of India (RBI) Governor Shaktikanta Das, whose digital likeness ‘reassured’ people about the app’s safety and financial returns.

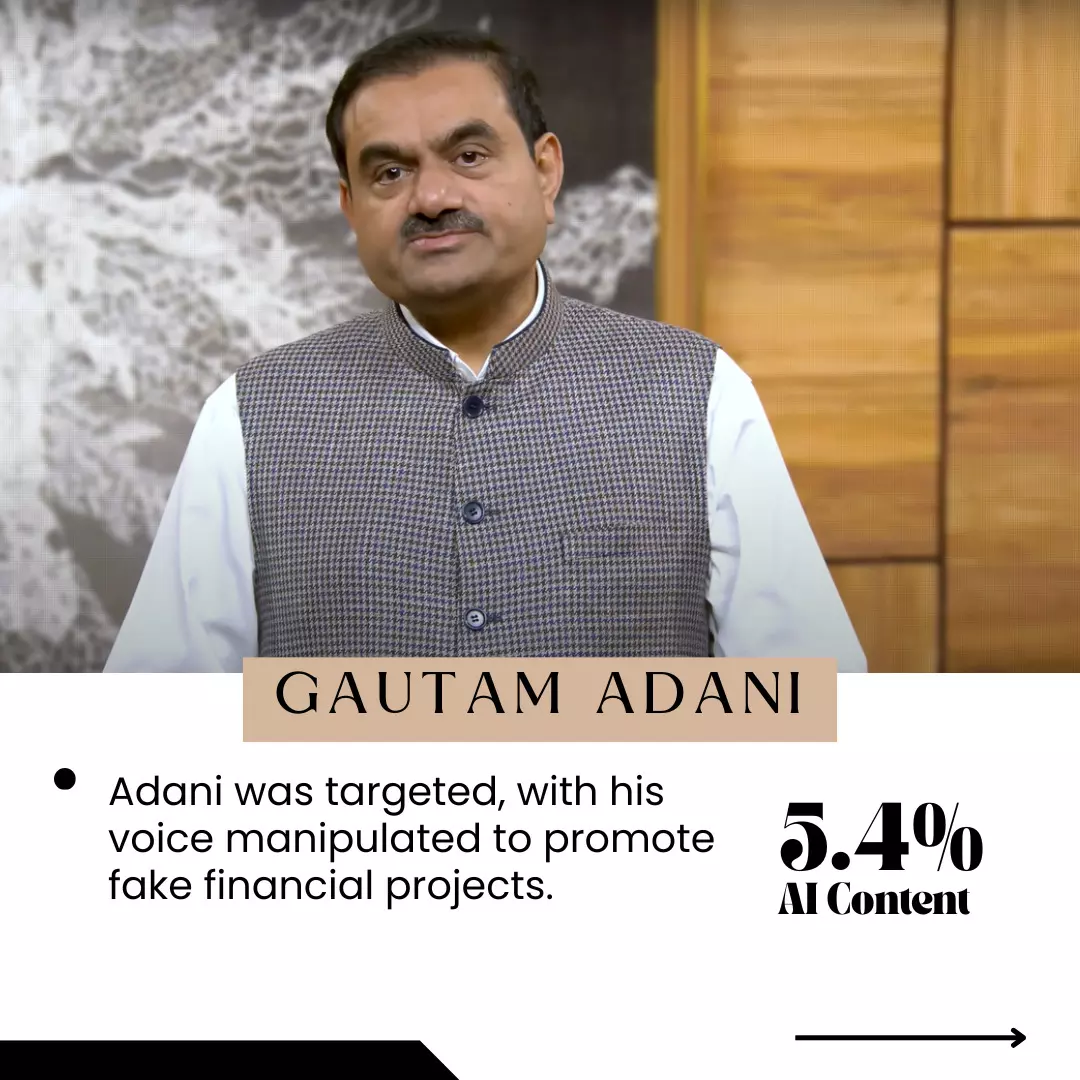

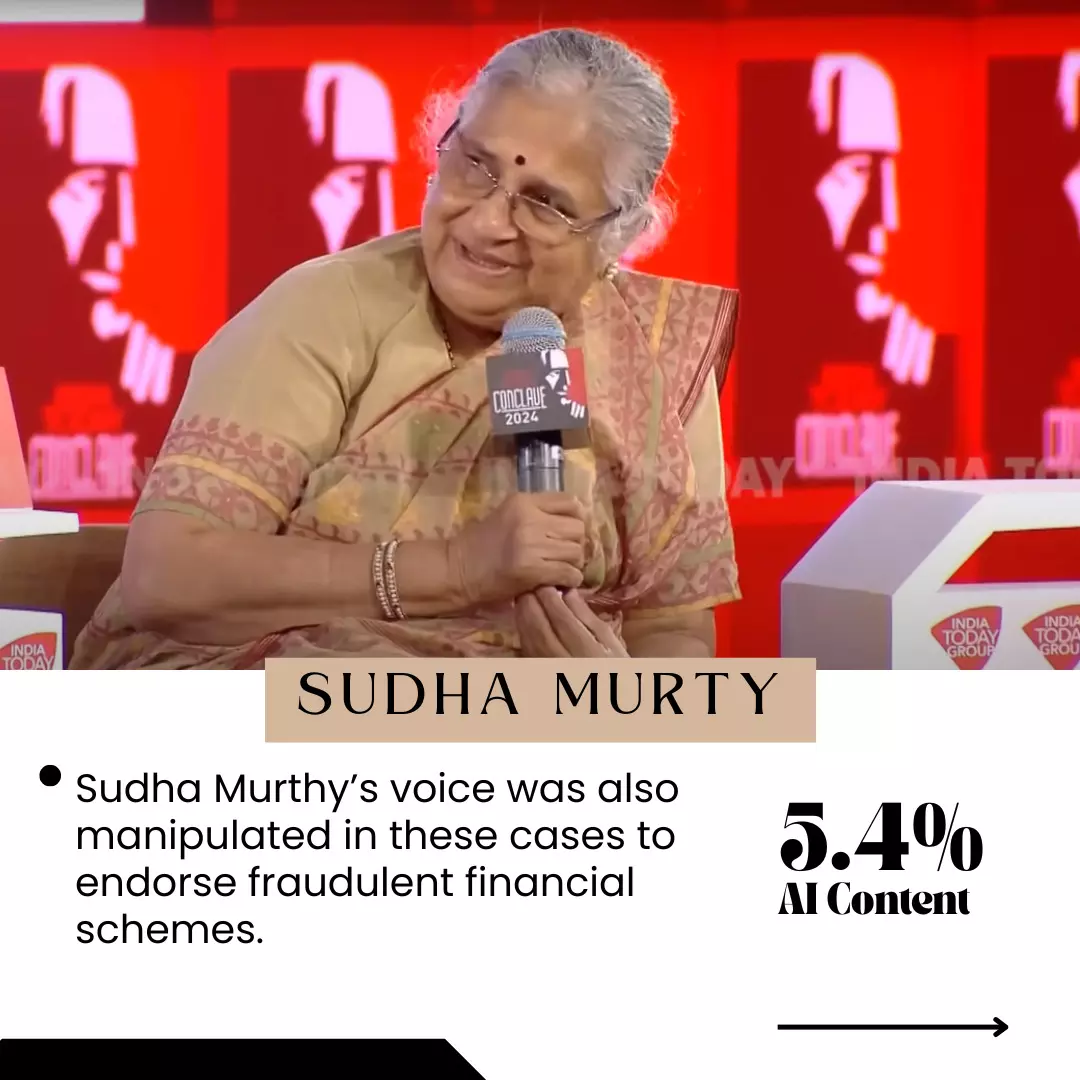

Figures like Gautam Adani and Sudha Murty were similarly targeted in at least 5.4 per cent of the cases we analysed, with their voices manipulated to promote fake financial projects. A deepfake of former Prime Minister Manmohan Singh was also used at least once to promote a fake investment platform. These videos, manipulated using synthetic audio, leverage the authority and trust these figures command to make fraudulent platforms appear legitimate.

Health Misinformation (HM)

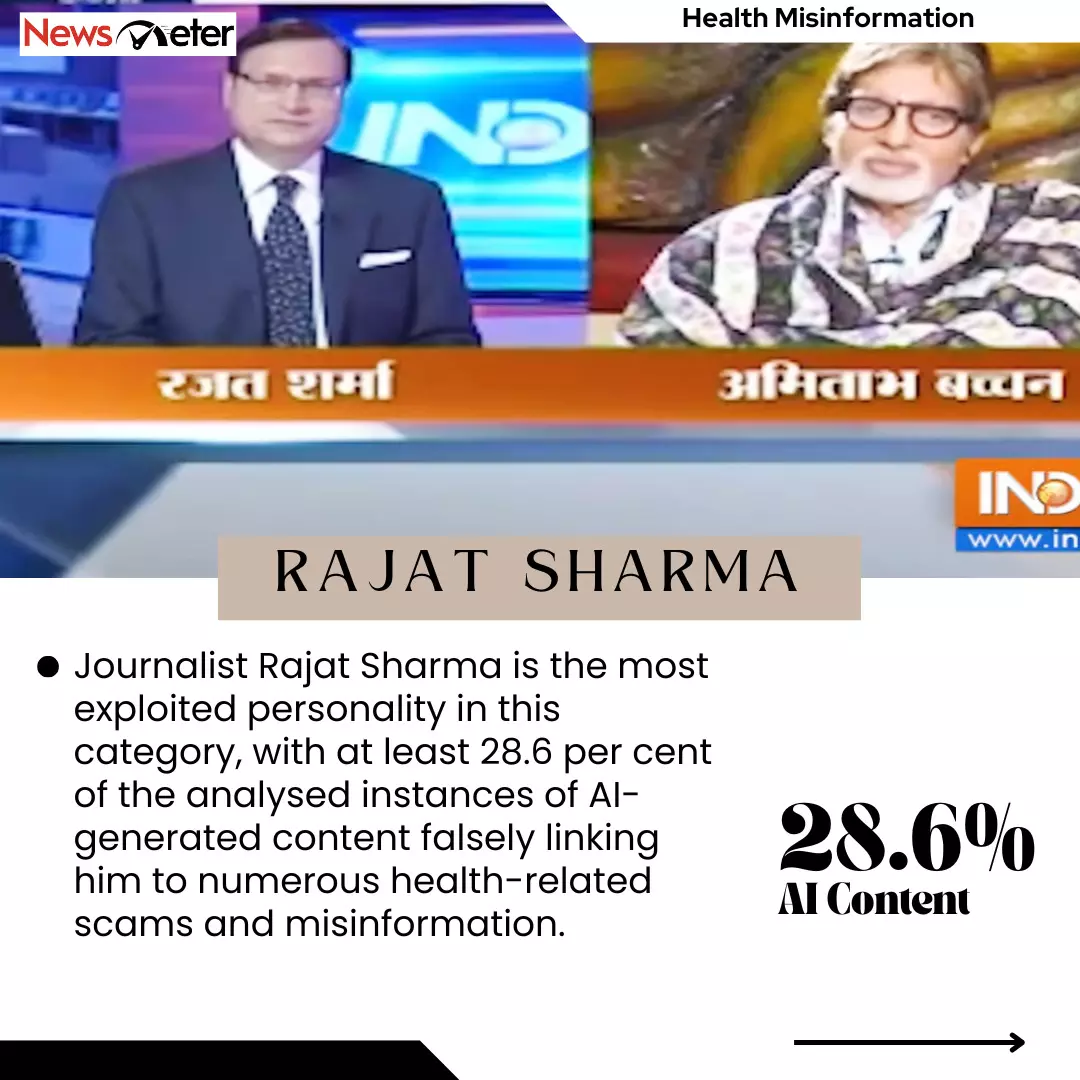

Journalist Rajat Sharma is the most exploited personality in this category, appearing in at least 28.6 per cent of the analysed instances of AI-generated content, which falsely linked him to health-related scams and misinformation.

Deepfakes of Rajat Sharma showed him promoting fraudulent treatments for diabetes, joint pain and vision loss. In October, Rajat Sharma and veteran Bollywood actor Amitabh Bachchan were seen in a manipulated video with synthetic audio, in which they ‘discussed’ a miraculous cure for joint pain.

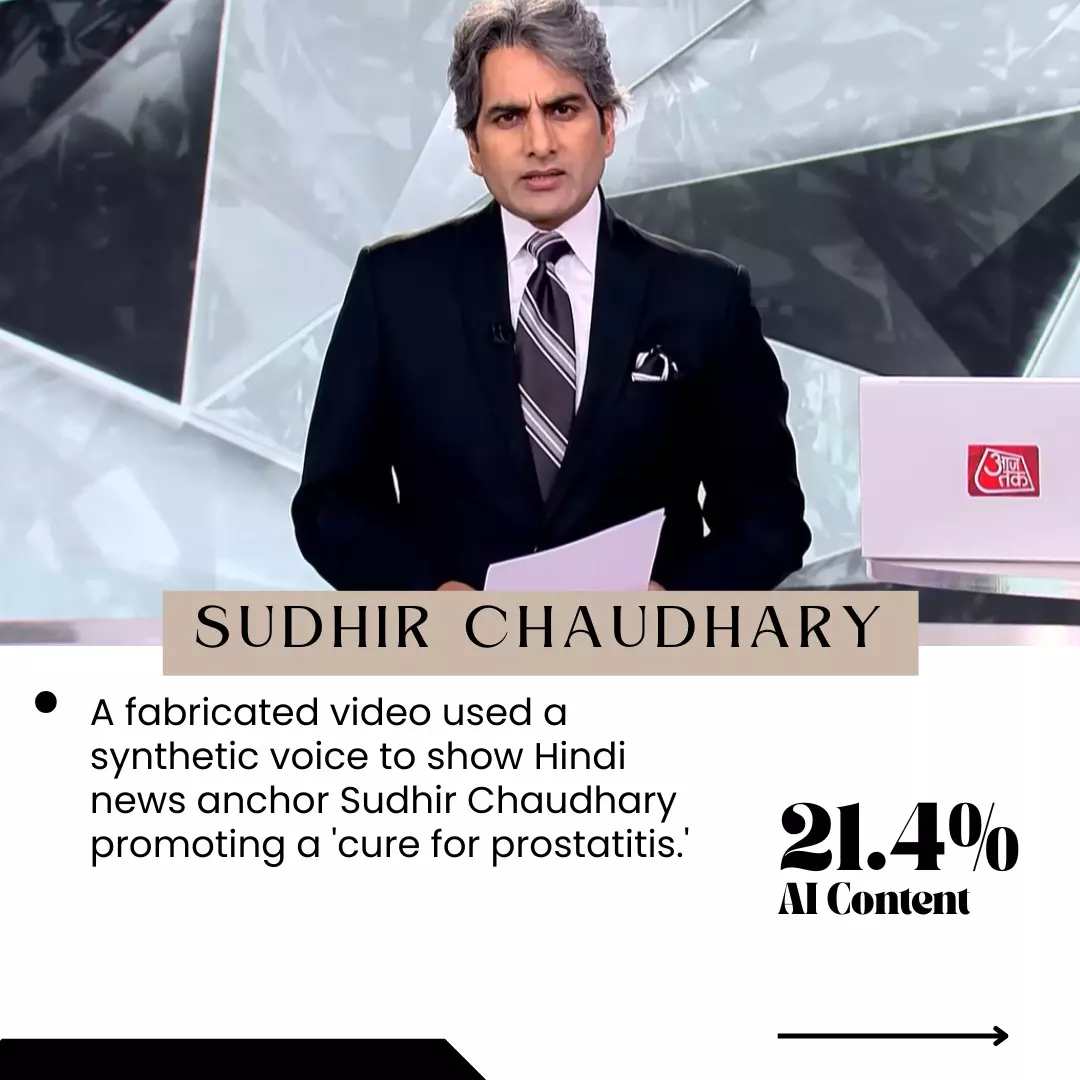

In November, another fabricated video with a synthetic voice showed Hindi news anchor Sudhir Chaudhary and cardiac surgeon Dr Naresh Trehan promoting a supposedly government-endorsed ‘cure for prostatitis’. Sudhir Chaudhary featured in at least 21.4 per cent of the fabricated videos we checked in this category. In December, Rajat Sharma, Ravish Kumar and eye specialist Dr Rahil Chaudhary were featured in several AI-manipulated videos promoting a ‘special drink’ as a solution for eye-related problems. None of these videos are real.

Popular news anchors such as Shweta Tripathi and Anjana Om Kashyap were also targeted by deepfakes at least once. In October, a deepfake video of Anjana Om Kashyap and Dr Rahil Chaudhary went viral. It convincingly but falsely showed them endorsing a ‘home remedy’ capable of improving eyesight.

Additionally, in this category, deepfakes were created from videos of several doctors, including Dr Bimal Chhajer, Dr Devi Shetty and Dr Deepak Chopra of the University of California, at least once each, to pass off misleading health information as credible health hacks.

Political Misinformation and Disinformation (PM/D)

With 2024 being an election year, Indian political leaders were targeted with AI-generated content to manipulate public perception and mislead voters.

Most recently, during the Maharashtra Assembly elections, AI-generated audio clips of NCP leader Supriya Sule and Congress leader Nana Patole were shared by the BJP ahead of voting day. The fake audio made it sound as though Sule was discussing cash with a person named ‘Mehta’ in exchange for Bitcoins to fund election campaigns, while assuring him that any inquiries would be managed once her side was in power. Patole’s real voice was manipulated to make it sound as though he was pressuring a ‘Gupta’ for funds.

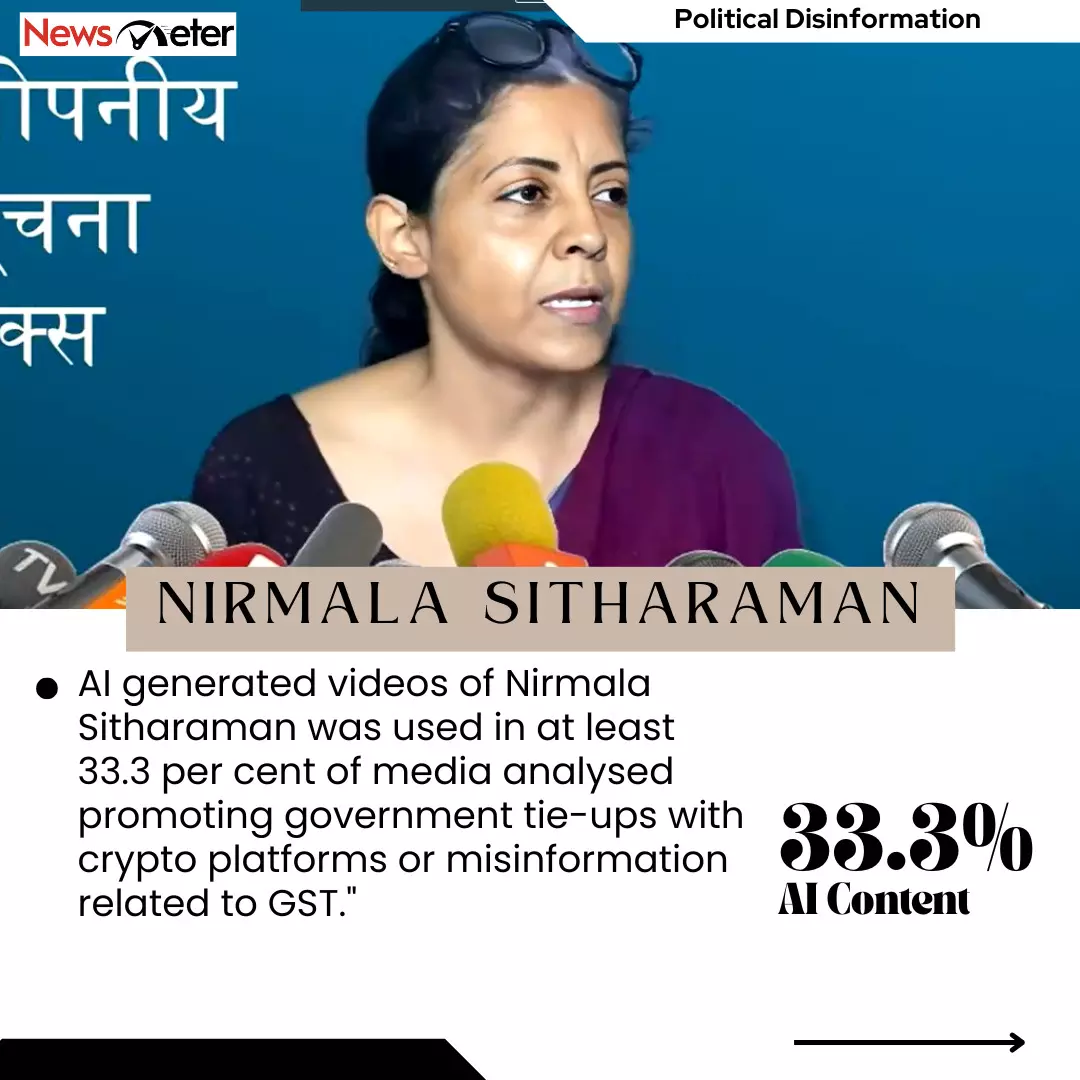

In January, a deepfake showed Finance Minister Nirmala Sitharaman announcing a strategic partnership between the Indian government and a crypto trading platform called Quantum Trade. In the video, she appeared to say that the project would be an avenue for wealth creation. In another deepfake, from July, her face was superimposed onto a different video to make it look as though she was saying that ‘GST is a secret tax’. Overall, Sitharaman appeared in at least 33.3 per cent of the misleading AI content we analysed in this category, whether it promoted supposed government tie-ups with crypto platforms or spread misinformation about GST.

Opposition leaders were targeted too. AI-generated content was used to spread false claims about fake resignations and misleading political statements involving Congress leaders Kamal Nath (22.2 per cent of the cases analysed) and Rahul Gandhi (11.1 per cent).

Manipulated videos of Bollywood celebrities were also used to spread political disinformation. In April, during the 2024 Lok Sabha elections, a manipulated video of Aamir Khan warned the public against political parties that make empty promises and urged viewers to vote for the Congress party. Also in April, a video of Ranveer Singh criticising Prime Minister Narendra Modi and endorsing the Congress went viral. While the visuals were unaltered, the audio was doctored by inserting a synthetic voice into some portions. The credibility of each actor was misused at least once.

Entertainment Gaming Apps (GA)

AI-generated content in this category frequently relies on deepfakes to associate celebrities with fraudulent gaming apps and online platforms. In 2024, social media was awash with fake celebrity endorsements of various gaming and entertainment apps, often intended to drive app downloads or to trick users into making in-app purchases or sharing personal information.

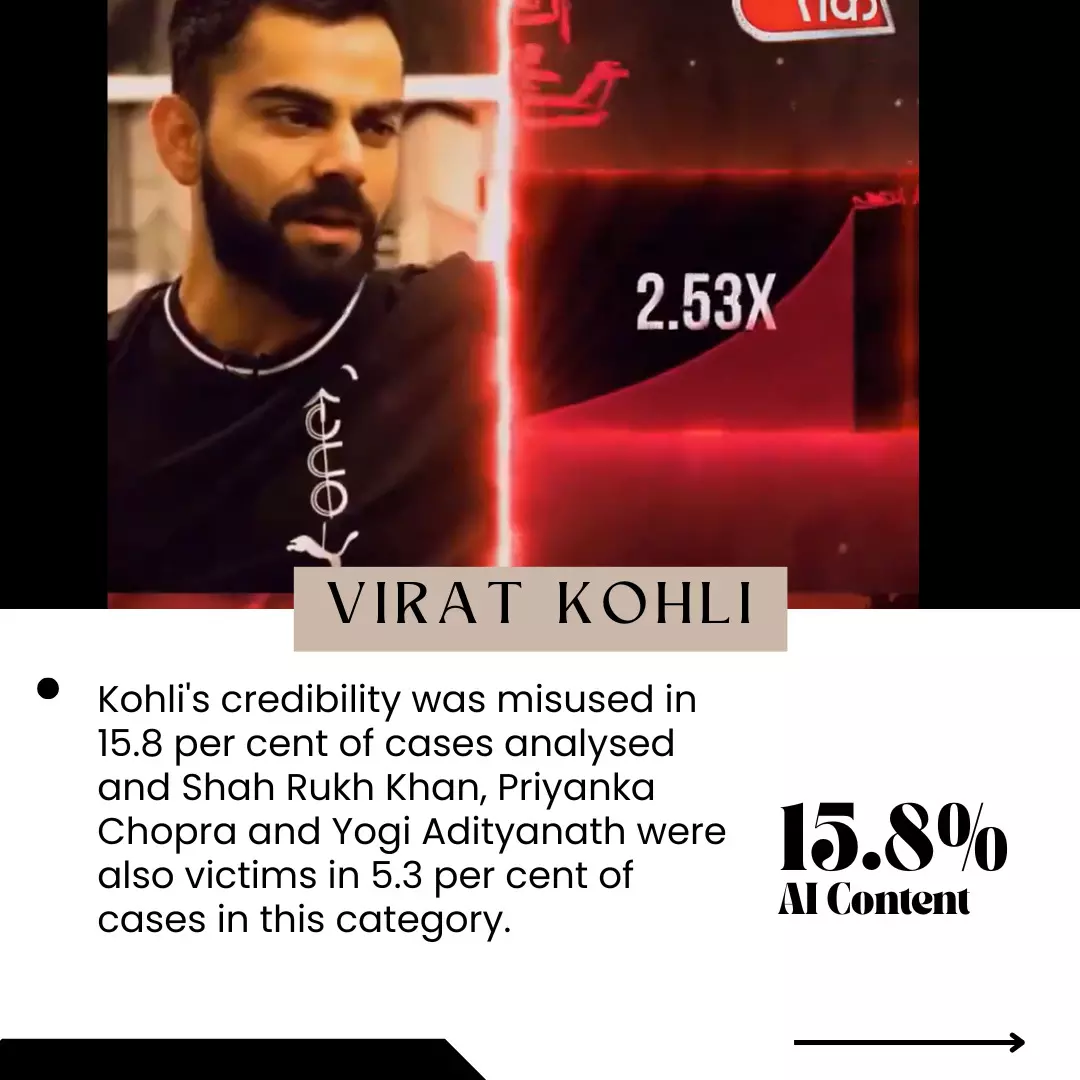

Bollywood actors, politicians, business tycoons, journalists and sportspersons have been used as conduits of fraud and misinformation. Their faces and voices were used without their consent to promote financial fraud. Voice clones of Virat Kohli, Shah Rukh Khan and Priyanka Chopra were used to promote fake gaming apps, while Uttar Pradesh Chief Minister Yogi Adityanath and Mukesh Ambani also appeared in doctored videos endorsing these platforms.

Mukesh Ambani topped this category as well, featuring in 21.1 per cent of the AI-generated content we analysed, followed by Kohli at 15.8 per cent. Shah Rukh Khan, Priyanka Chopra and Yogi Adityanath were also targeted in 5.3 per cent of the cases in this category.

The widespread use of AI-generated content to deceive the public about financial opportunities and health remedies, and to mislead voters, is a pressing issue that threatens public trust. The credibility of public figures is being exploited to manipulate ordinary citizens. Tech companies should train their systems to prevent their technologies from being used maliciously. It is imperative that the Government of India and other governments work with tech companies on a common framework to curb the misuse of AI-generated content.